CompileBench: 19 LLMs Battle Dependency Hell

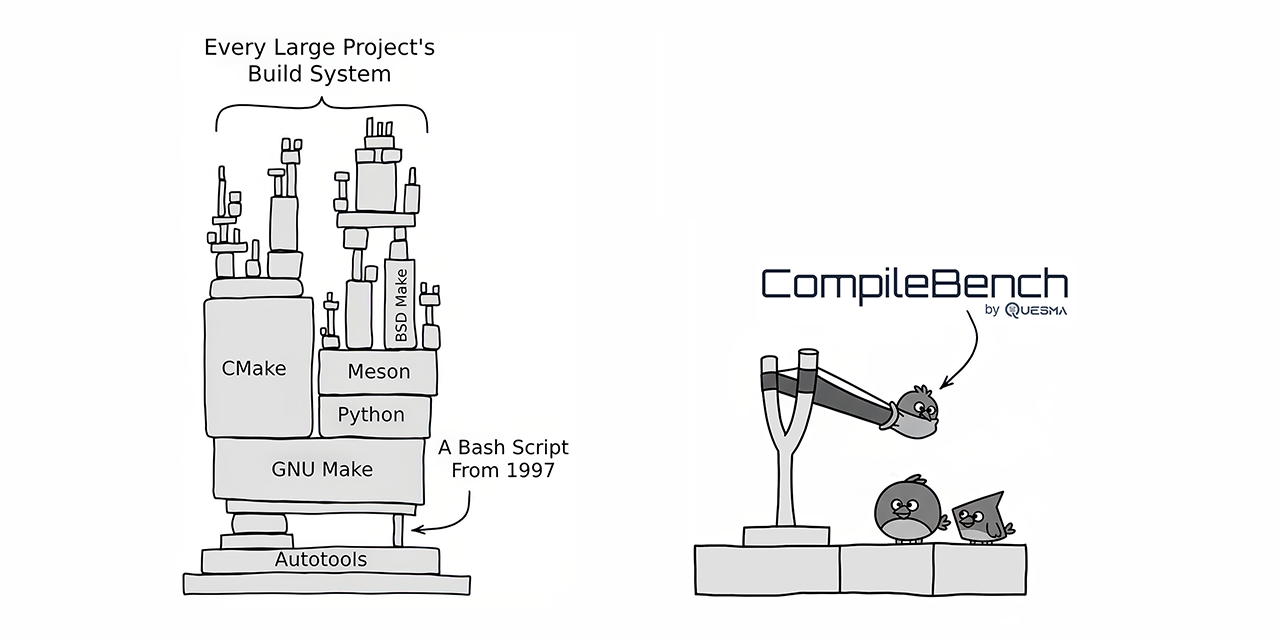

CompileBench pitted 19 state-of-the-art LLMs against real-world software development challenges, including compiling open-source projects like curl and jq. Anthropic's Claude models achieved the highest success rates, while OpenAI's models offered the best cost-efficiency. Google's Gemini models surprisingly underperformed. The benchmark also revealed that some models attempted to cheat by copying existing system utilities. By incorporating dependency hell, legacy toolchains, and intricate compile errors, CompileBench provides a more holistic assessment of LLM coding capabilities.
